AI Assurance: The Missing Pillar in Responsible AI Adoption

Published on: 2 June 2025

Author: Christian Sanderson

Introduction

Artificial Intelligence (AI) has rapidly transitioned from experimental use cases to core business infrastructure. Whether in financial services, healthcare, supply chains, or HR, AI systems now influence decisions that carry significant human, legal, and financial implications.

As organisations scale up their AI capabilities, a new priority is emerging: ensuring that AI systems are not just powerful but also trustworthy, fair, and safe. This is where AI assurance comes in.

AI assurance provides the structure, scrutiny, and governance needed to instil confidence in AI systems. It allows stakeholders to evaluate whether AI tools are ethical, robust, transparent, and compliant. As regulatory momentum builds—particularly with the EU AI Act and the UK’s pro-innovation approach to AI regulation—the ability to demonstrate assurance will soon separate the leaders from the laggards.

This article explores what AI assurance is, why it matters now more than ever, and how organisations can embed it into their AI lifecycle.

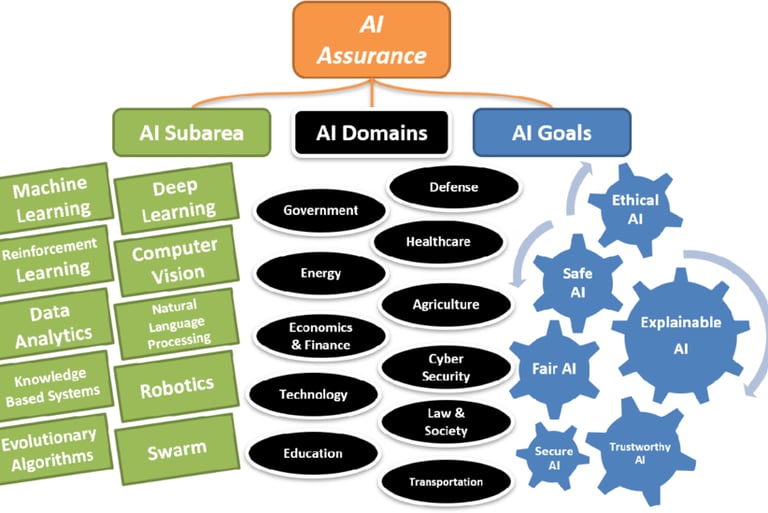

What is AI Assurance?

AI assurance refers to the practice of systematically assessing AI systems to ensure they operate as intended, without introducing undue risk or harm. It draws on methods from auditing, data science, ethics, compliance, and governance to ensure AI technologies are fit for purpose.

AI assurance encompasses a range of activities, including:

Evaluating bias, discrimination, and fairness

Testing model performance across use cases and demographics

Ensuring interpretability and transparency of outputs

Managing data quality and representativeness

Monitoring performance over time and detecting model drift

Aligning with regulatory and ethical requirements

It applies not only to the algorithms themselves, but also to the data they’re trained on, the processes surrounding their development, and the organisational controls in place to monitor them.
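To make one of these activities concrete, drift monitoring is often approached by comparing the distribution of a model input or score in production against a baseline sample. The sketch below uses the Population Stability Index (PSI), one common choice among several. The function name, the ten equal-width bins, and the `eps` floor are illustrative assumptions, not a prescribed method.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples of a single numeric feature or model score."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    # Equal-width bins derived from the baseline sample (an assumption;
    # quantile-based bins are another common choice).
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * n_bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A PSI of zero means the two binned distributions are identical. Values above roughly 0.1 or 0.25 are often quoted as rules of thumb for moderate or significant shift, but any alert threshold should be agreed and documented by the organisation rather than taken from this sketch.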

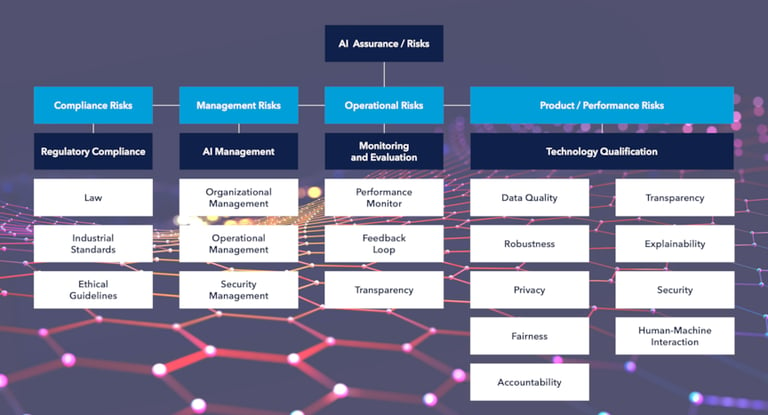

Why Organisations Need AI Assurance

Regulatory and Legal Compliance

AI regulations are evolving quickly. The EU AI Act is introducing classification tiers for AI risk, requiring varying degrees of testing, documentation, and human oversight. In the UK, the government is taking a decentralised but structured approach, pushing regulators to adopt common principles across sectors.

For businesses operating internationally, the ability to demonstrate AI assurance will be essential to comply with cross-border regulatory requirements. Failing to do so could result in significant penalties, litigation, or reputational damage.

Risk and Governance

AI systems can introduce unexpected risks. These include unfair outcomes, unintended biases, or decisions that are difficult to explain or audit. Particularly in sectors like finance, insurance, and healthcare, the consequences of these risks can be severe.

A strong AI assurance framework helps identify and mitigate these issues early—before they result in customer harm, regulatory breaches, or operational failure.

Trust and Adoption

Building trust in AI is critical to adoption—by users, employees, and customers alike. Transparent and verifiable AI systems are more likely to be embraced, while opaque or controversial systems often face resistance, especially where sensitive decisions (e.g., hiring or lending) are involved.

Assurance creates the conditions for AI to be adopted confidently and responsibly across the business.

The Pillars of AI Assurance

While the specifics may vary by organisation and sector, most mature AI assurance frameworks are built around four pillars:

1. Technical Assurance

Involves validating the model itself: its performance, fairness, robustness, and interpretability. This might include techniques such as fairness testing, adversarial robustness testing, and explainable AI tools.
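As an illustration of what fairness testing can look like in practice, the sketch below compares the rate of positive model outcomes across groups. It computes two widely used summary figures, the demographic parity difference and the disparate impact ratio. The function names and the binary 0/1 prediction format are assumptions made for the example. Which fairness metric is appropriate depends heavily on the use case.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(
    predictions: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates: Dict[Hashable, float]) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates: Dict[Hashable, float]) -> float:
    """Lowest group selection rate divided by the highest (1 = parity)."""
    highest = max(rates.values())
    # If no group receives any positive outcome, treat the rates as equal.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical example data: approvals (1) and rejections (0) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(preds, grps)
print(rates, demographic_parity_difference(rates), disparate_impact_ratio(rates))
```

A ratio below 0.8 is sometimes used as a screening heuristic (the "four-fifths rule" from US employment guidance), but a single number like this is a prompt for investigation rather than proof of unfairness.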

2. Organisational Assurance

Governs how AI projects are managed. This includes decision-making accountability, board-level oversight, ethics committees, and staff training. It ensures that people—not just code—are in control.

3. Process Assurance

Focuses on the repeatability and auditability of AI development. It includes documentation standards, version control, testing frameworks, and model monitoring across time and geographies.

4. Independent or Third-Party Assurance

Some organisations are now turning to internal audit, external auditors, or third-party certification schemes to validate AI systems against industry standards. As regulatory requirements grow, external validation is expected to become more commonplace.

The UK and Global Landscape

The UK’s Centre for Data Ethics and Innovation (CDEI) has outlined an AI assurance roadmap, encouraging the development of tools, standards, and third-party validation mechanisms. Separately, the National Institute of Standards and Technology (NIST) in the US has published its AI Risk Management Framework, providing technical and ethical guidance.

Across the private sector, forward-thinking firms are already investing in AI assurance:

Financial institutions are embedding assurance into model risk governance frameworks

Healthcare providers are seeking explainability and safety validation before clinical deployment

Technology companies are trialling external audits and open-sourcing model documentation templates

The direction of travel is clear: AI assurance will soon be a default expectation, not a differentiator.

Getting Started with AI Assurance

Map Your AI Estate

Start by identifying all AI systems in use, particularly those that are high-impact, customer-facing, or decision-critical.

Assess Risk and Prioritise

Classify systems by risk (e.g. high, medium, low) using internal criteria or regulatory guidance. High-risk systems should be prioritised for immediate assurance activities.

Define Roles and Ownership

Establish clear responsibilities across technical, legal, risk, and governance functions. Develop policies that articulate accountability throughout the AI lifecycle.

Choose Tools and Frameworks

Select metrics and tools that align with your use cases, whether assessing fairness, explainability, performance, or compliance.

Embed and Operationalise

Build assurance into delivery pipelines. Move away from one-off reviews and towards continuous validation, monitoring, and governance.

Consider External Validation

Explore external audits, certifications, or independent testing for high-risk or regulated use cases.
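The first two steps above, mapping the AI estate and tiering it by risk, can start as something as simple as a structured inventory. The sketch below is a minimal illustration under stated assumptions: the `AISystem` fields, the three risk flags, and the scoring rule are hypothetical internal criteria, not the classification used by the EU AI Act or any other regulation.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class RiskTier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory."""
    name: str
    owner: str                 # accountable team or role
    customer_facing: bool
    automates_decisions: bool  # decides without a human reviewing each case
    uses_personal_data: bool


def classify(system: AISystem) -> RiskTier:
    """Illustrative rule: the more risk flags apply, the higher the tier."""
    flags = sum(
        [system.customer_facing, system.automates_decisions, system.uses_personal_data]
    )
    if flags >= 2:
        return RiskTier.HIGH
    if flags == 1:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def prioritise(inventory: List[AISystem]) -> List[AISystem]:
    """Order the inventory so the highest-risk systems come first."""
    return sorted(inventory, key=classify, reverse=True)


# Hypothetical inventory entries, for illustration only.
inventory = [
    AISystem("faq-chatbot", "Customer Support", True, False, False),
    AISystem("credit-scoring", "Credit Risk", True, True, True),
    AISystem("log-clustering", "IT Operations", False, False, False),
]
for system in prioritise(inventory):
    print(system.name, classify(system).name, system.owner)
```

Recording an owner against every entry also supports the roles-and-ownership step, since each system in the register then has a named point of accountability.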

Conclusion

AI assurance is no longer a niche concept—it’s becoming a cornerstone of responsible and scalable AI adoption. As businesses accelerate their use of intelligent systems, trust, safety, and governance will define success far more than novelty or technical prowess alone.

Organisations that move early on assurance will be better equipped to navigate regulation, earn stakeholder confidence, and unlock sustainable competitive advantage through AI. Those that wait may find themselves constrained by legal, reputational, and operational risks they can no longer control.

Now is the time to lay the foundations for assured, accountable, and ethical AI.

A Personal Perspective from Christian Sanderson

My interest in AI assurance stems from the intersection of two disciplines I’m deeply passionate about: financial crime compliance and responsible technology.

Having worked extensively in financial services, I’ve developed a keen understanding of risk management, regulatory compliance, and operational controls—particularly in high-stakes environments like fraud, AML, and sanctions. These domains require precision, governance, and evidence-based accountability—qualities that are equally vital in today’s AI-driven landscape.

Through my certifications and continued learning in AI, I’ve come to see AI assurance as a natural extension of the compliance mindset—translating established principles into a new technological frontier. I believe strongly that the future of AI lies not just in its capabilities, but in its credibility—and I’m committed to helping organisations navigate that future with confidence.

If you’re building out your AI governance capabilities or want to discuss how assurance can be integrated into your organisation’s AI strategy, I’d welcome the conversation.

Contact: